Subtitles & vocabulary

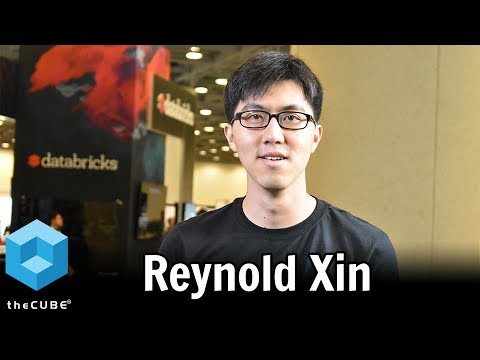

Reynold Xin | Spark Summit 2017

黃柏軒 posted on 2019/03/04

Video vocabulary

sort

US /sɔrt/ ・ UK /sɔːt/

- Transitive Verb

- To organize things by putting them into groups

- To deal with things in an organized way

- Noun

- Group or class of similar things or people

A1 TOEIC

tremendous

US /trɪˈmɛndəs/ ・ UK /trəˈmendəs/

- Adjective

- Very good or very impressive

- Extremely large or great.

B1 TOEIC

effort

US /ˈɛfɚt/ ・ UK /ˈefət/

- Uncountable Noun

- Amount of work used trying to do something

- A conscious exertion of power; a try.

A2 TOEIC